Charting a Path from Redshift to Snowflake

ezCater is a data-driven company. For a long time, the principal source for our metrics was our Redshift data warehouse. As a result, we invested heavily in building out the data systems that fed Redshift. We also staffed up a team of engineers dedicated to building, enhancing, and growing our data systems. (And yes, we're hiring!)

To ingest external data into the warehouse, we make extensive use of Stitch (as detailed on their site). That gets the data into a set of staging schemas, but that's where it ends. Stitch is great, but it's not an all-in-one ETL solution. Further, we need to import a sanitized version of our production data into the warehouse as well. That's where our homegrown Ruby/Rake ETL process takes over. We copy data from a dedicated production read-replica, translate Stitch's raw imports into tables that are digestible by our data analysts, and ensure that users and permissions are set up exactly as configured.

However, we reached a scale and a level of sophistication that made Redshift less appealing than competing options. So, we made the decision to migrate to Snowflake. There was one major challenge with that choice: Snowflake unfortunately does not provide an official SDK for Ruby. Thankfully our friends over at Localytics have cleared a path for Rails with their ODBC adapter, which we figured we could modify for our use case since we use ActiveRecord to manage our database connections.

The conversion from Redshift to Snowflake is now complete, and we've decommissioned our Redshift cluster. What follows is the account of how we resolved all the roadblocks that stood in our way. If you're in a TL;DR kind of mood, feel free to scroll to the end for a quick summary.

The Journey Begins in Development

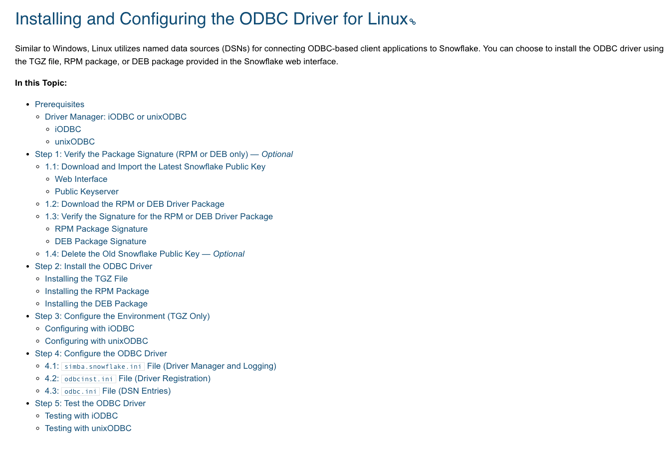

We started by looking at Localytics' blog entries and the Snowflake ODBC how-to guides to get the ETL code talking to Snowflake. Just a cursory glance at the Snowflake docs presented a daunting picture rife with dependencies on drivers, libraries, and arcane configuration parameters in INI files. Looking at the table of contents, you can see it's a (supposedly) simple five-step setup:

But let's face the facts: ODBC is 26 years old and was built for Windows 3.0. It isn't a surprise if it's a little clunky.

For speed of iteration, we started working in a local Mac development environment, installing iODBC, the Homebrew unixodbc tap, and the Snowflake driver. (Note that the library you'll need is unixodbc-dev elsewhere!)

Setting up the INI files properly proved to be frustrating, as the default error isn't all that helpful to explain what went wrong. You can see that error below:

[unixODBC][Driver Manager]Data source name not found, and no default driver specifiedCommence tearing of hair and rending of garments.

Digging into the odbc_adapter code, we noted that the odbc_connection method had an option to connect with an ODBC connection string. If dsn is in the config hash, it uses a traditional ODBC DSN lookup to pull together the configuration and connect. If it's not and conn_str is specified instead, then it uses that for a DSN-less connection. The block comment says it all:

# Connect using ODBC connection string# Supports DSN-based or DSN-less connections# e.g. "DSN=virt5;UID=rails;PWD=rails"

#"DRIVER={OpenLink Virtuoso};HOST=carlmbp;UID=rails;PWD=rails"

So we started down that path. Our connection manager class pulls the Redshift password from the environment, so it seemed like an easy patch to pull a pwd value for the Snowflake password if it was configured to talk to Snowflake. Also, since we parse out all our connection configuration from a traditional database.yml, it seemed logical to dump all the Snowflake connection parameters into a new section in there. We could then use that to build a connection string in code. Sure enough, it worked! Hurdle #1 surmounted -- we could run basic ETL code against Snowflake from a dev machine with no INI files and no password on disk. Commence celebration. 🎉

Speed Bumps

The celebration was short-lived, as our builds immediately began failing on CircleCI. The build containers didn't have unixodbc-dev installed, and thus bundler couldn't install the odbc_adapter gem. Since we didn't need it for our test suite, it was simple enough to put the gem in a production group and exclude that group with bundle install --without production.

Then, another complication crept in: the ODBC adapter supports a single SQL statement at a time. As a result, we'd also put in a query-splitter, since our ETL SQL often will batch a CREATE TABLE alongside the INSERT in the same file. Splitting on ; seemed safe, but proved troublesome for queries that legitimately had strings with semicolons in them. A little creative application of a better regex did the trick, but it would have been a bigger deal if there was any transaction management involved. Opening a transaction and then failing on a separate single statement later in the file would have needed careful error handling to roll things back.

Once we applied these fixes, it was time to replicate our dev environment success in the staging and production environments.

Onward to Production!

Our ETL currently runs on a node that's configured by Chef. In broad terms, testing Chef is easy if you use Test Kitchen -- just fire up a VM with Test Kitchen and iteratively modify your Chef cookbooks & recipes until they do what you want. In our case, it looked as though we needed to do a few things:

- Modify the

ymltemplate to add a Snowflake section - Add a recipe to set the variables that populate the template

- Add a recipe to install

unixodbc-devas a prerequisite for theodbc_adaptergem - Manually download and add the Snowflake Debian package to Chef;

dpkg -ito install it (Yes, manually. Snowflake doesn't support a simpleapt-getfrom anywhere, and a GET request to fetch the package from their website requires an auth token.)

All that went swimmingly in our production-like staging environment, but then came the dreaded "Data source name not found, and no default driver specified" errors. Lots and lots of them.

We backed off and set up INI files, using odbcinst -j to confirm that we had set up the files in the right locations with the correct values (matching what worked in development). That worked, and we re-commenced a short-lived celebration. 🎉

Having confirmed there wasn't anything about the staging environment that intrinsically prevented us from connecting, we tried the DSN-less connection again. Nothing. Our Google searches didn't help either, since there weren't any specific keywords we could use to hone in on our particular issue. So we dove into some C code spelunking, noting that the trail kept dead-ending at this line in the Ruby ODBC driver:

connection = ODBC::Database.new.drvconnect(driver)We pulled down the ruby_odbc code and looked for "drvconnect" to get more context into what that function was looking for. That search led to the dbc_drvconnect function, which in turn allocates a connection object, connects, and then deallocates the connection. The connection function is SQLDRIVERCONNECT, which is a #define for SQLDriverConnect. Finding no further mention of that, it was apparent that SQLDriverConnect was a function in the underlying unixODBC library.

The spelunking continued. Sure enough, we found release notes on unixodbc.org mentioning numerous changes to that function, including a enhancement in version 2.3.1 regarding command-line flags for isql allowing a DSN-less connection -- and this interesting comment:

Allow setting the DM overrive [sic] values in the connection string to SQLDriverConnect for example "DRIVER={Easysoft ODBC-SQL Server};Server=myserver;UID=user;PWD=pass;DMStmtAttr=SQL_QUERY_TIMEOUT=10;"

Running odbcinst -j one more time, it became clear. On Ubuntu Trusty, the OS on our staging box, the version of the unixodbc library is 2.2.14. The version installed by Homebrew is 2.3.6, the latest. We should've seen that one before. Facepalm.

Trusty's unixodbc-dev is about 10 years old. Upgrading to Xenial would pick up version 2.3.1, but even that is about 7 years old. The latest security fix had been made in March 2018 with version 2.3.6, so that seemed to be the version we'd want. So, amongst the available options, we chose to build our own 2.3.6 binaries, package them, and then dpkg -i them with Chef since we're already doing that with the Snowflake package.

And wouldn't you know, once we did that, everything worked exactly as intended the first time.

Celebration. And naptime. 🎉

Lessons Learned about Using a DSN-less ODBC Connection

- Get the right library versions, even if it means compiling it yourself

- Explicitly include the initializer if you're not using Rails

- The ODBC infrastructure described here doesn't handle concurrency seamlessly, so:

- Synchronize creating your connection manager, if you have one

- Synchronize opening a connection, if you run concurrently

The TL;DR Version

Setting up a DSN-less Snowflake Connection with Ruby/ActiveRecord

We have encapsulated the following configuration into an internal gem which we hope to open source, but for now here are the steps to get you going:

-

Install everything. This includes the

unixodbc-devsystem library (unixodbcin Homebrew), the Snowflake driver, and theodbc_adaptergem (which depends onruby-odbc). You must install a version ofunixodbcthat is >= 2.3.1. -

Create a Snowflake ODBC initializer, as detailed in the Localytics blog. Add the necessary

requirestatements in your code, since you won't be able to rely on Rails magic. - Construct a connection string by concatenating your config key=value pairs using semicolons. You'll need this in the next step to set the value for the

conn_strkey in the config hash. Snowflake lists the required and optional connection parameters on their site. Remember not to specify adsnkey or else the ODBC adapter will assume it should look for INI files! -

Add the appropriate configuration to ActiveRecord. We read ours from our

database.yml, but it boils down to something like this once you add in the connection string:

-

Connect and do things! For example: